First, my web domain, Signals and Noises, is half pun, half tip-of-the-hat to Claude Shannon and Warren Weaver's 1949 publication The Mathematical Theory of Communication. It essentially laid the groundwork for what is now commonly referred to as information theory, in which the concepts of 'signal' and 'noise' play central roles. Simply put, the term 'signal' refers to any message, regardless of medium: spoken dialog, music, telephony, internet stream, text, images, radio or television broadcast, photons from a distant galaxy, etc. The term 'noise' includes anything that obscures that message: static, transmission gaps and errors, interference, cancellation, echo, harmonic distortion, scratches on a phonograph record, coffee stains, typos, torn paper, missing pages, cloud cover, etc. The signal-to-noise ratio (SNR), a term familiar to all audiophiles, compares the strength of the signal with the strength of the background noise: the higher the ratio, the more faithfully the received message reproduces the original. Noise is intimately related to the universal concept of entropy, or decay. At the same time, signals often include redundancy as a form of error correction, that is, as a way of ensuring that the message survives the noise. For example, natural language (including writing) is highly redundant: yu cn rd ths sntnce evn f mst f th vwls r mssng. James Gleick's book, The Information: A History, a Theory, a Flood, is a very readable introduction to information theory and its significance.
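The idea of redundancy as error correction can be made concrete with the simplest possible scheme, a triple-repetition code. This is only an illustration under assumed parameters (the function names, the 5% flip probability, and the seed are hypothetical, not taken from any particular system): each bit is sent three times, random bit flips play the role of noise, and the receiver recovers the message by majority vote.

```python
# A minimal sketch of redundancy as error correction: a triple-repetition code.
# Each bit is sent three times; the receiver takes a majority vote, so any
# single flipped bit within a group of three is corrected.
import random


def encode(bits: list[int]) -> list[int]:
    """Repeat every bit three times (this is the added redundancy)."""
    return [b for b in bits for _ in range(3)]


def add_noise(signal: list[int], flip_prob: float, seed: int = 0) -> list[int]:
    """Simulate a noisy channel: flip each bit independently with flip_prob."""
    rng = random.Random(seed)
    return [b ^ 1 if rng.random() < flip_prob else b for b in signal]


def decode(received: list[int]) -> list[int]:
    """Majority vote over each group of three received bits."""
    return [1 if sum(received[i:i + 3]) >= 2 else 0
            for i in range(0, len(received), 3)]


if __name__ == "__main__":
    message = [1, 0, 1, 1, 0, 0, 1, 0]
    received = add_noise(encode(message), flip_prob=0.05, seed=42)
    print("sent:   ", message)
    print("decoded:", decode(received))
```

Like the vowel-stripped sentence, the code spends extra symbols to buy robustness: it triples the length of the message, and it can repair only one flipped bit per group of three.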

Let's move on to the last phrase of the epigraph on my home page: "sources of semi-autonomous authority." By which I mean authorship, or in a broader sense, agency: the act of making essential decisions about any or every aspect of a work under construction. Perhaps we might say that authors are humans, while agents are algorithms; in either case, someone or something that causes something to be done. Autonomous agents do what they want all by themselves; semi-autonomous agents not so much: constraints are placed upon them, and they operate within a small realm.

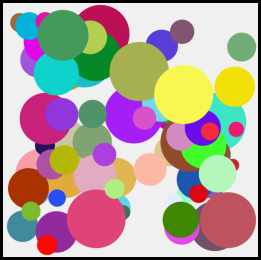

Here's a quick example, provided by Lali Barrière, that illustrates the difference. The creative agent, in this case, is an algorithm that draws circles of random diameters and colors within a rectangular boundary. In the first image above (left), the algorithm is allowed to "run wild": there are virtually no constraints on the circles' size, color, or position. In the second image (right), small constraints have been imposed. The range of permitted diameters is narrower, the colors never stray from the gradient between green and black, and, perhaps most importantly, the circles are confined to a grid. To my eye, the second composition is far more arresting and aesthetically pleasing, perhaps because the constraints transform a random process into a loose kind of pattern that is more relatable than pure noise. Humans like patterns.
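The contrast between the two images can be sketched in code. This is a hypothetical illustration, not Lali Barrière's actual program: the canvas size, grid dimensions, diameter ranges, and the one-circle-per-cell layout are all assumptions. It generates only the circle parameters; any drawing library could render them.

```python
# Hypothetical sketch (not the original program): unconstrained vs.
# constrained random circles. Only parameters are generated, not pixels.
import random
from dataclasses import dataclass

WIDTH, HEIGHT = 800, 600  # assumed canvas size in pixels


@dataclass
class Circle:
    x: float
    y: float
    diameter: float
    rgb: tuple[int, int, int]


def run_wild(n: int, rng: random.Random) -> list[Circle]:
    """Virtually no constraints: any position, almost any size, any color."""
    return [Circle(rng.uniform(0, WIDTH), rng.uniform(0, HEIGHT),
                   rng.uniform(1, 400),
                   (rng.randrange(256), rng.randrange(256), rng.randrange(256)))
            for _ in range(n)]


def constrained(rng: random.Random, cols: int = 8, rows: int = 6,
                min_d: float = 20, max_d: float = 60) -> list[Circle]:
    """Small constraints: one circle per grid cell (assumed layout), a narrow
    range of diameters, and colors on the line between black and green."""
    cell_w, cell_h = WIDTH / cols, HEIGHT / rows
    circles = []
    for row in range(rows):
        for col in range(cols):
            t = rng.random()                  # 0 = black, toward 1 = green
            circles.append(Circle(
                x=(col + 0.5) * cell_w,       # center of the grid cell
                y=(row + 0.5) * cell_h,
                diameter=rng.uniform(min_d, max_d),
                rgb=(0, round(255 * t), 0)))
    return circles


if __name__ == "__main__":
    rng = random.Random(1)
    print(run_wild(3, rng))
    print(constrained(rng)[:3])
```

The constrained version still draws a random value for every parameter; it simply narrows the space each parameter is allowed to occupy.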

This might be a good spot for a clarification. The term 'random' is actually a hot topic in science and math. Is anything truly random? Or is there an ultimate cause, albeit one that remains beyond our ability to perceive? The random number generator available on most computers is, in fact, a pseudo-random number generator: the algorithm that produces the sequence of apparently uncorrelated numbers is entirely deterministic. If you "seed" the algorithm with the same number every time you run it, it produces precisely the same "random" sequence. That is why, in many applications, the algorithm is seeded with the current date and time, so that you cannot predict what it will produce and the sequence differs every time it is run. Prediction, or more precisely its absence, is key to what we understand as randomness: a random sequence is one that cannot be predicted and in which no pattern can be discerned.
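To see why seeding matters, here is a minimal linear congruential generator (LCG), one classic kind of pseudo-random number generator, computing x_{n+1} = (a * x_n + c) mod m. The constants a = 1664525, c = 1013904223, m = 2^32 are widely published values chosen here for illustration; this is not necessarily the generator any particular computer uses (Python's own `random` module, for instance, uses the Mersenne Twister), but the determinism is the same.

```python
# Minimal LCG: x_{n+1} = (a * x_n + c) mod m, with illustrative constants.
# Entirely deterministic: the same seed always yields the same sequence.
import time

A, C, M = 1664525, 1013904223, 2**32


class LCG:
    def __init__(self, seed: int):
        self.state = seed % M

    def next_float(self) -> float:
        """Advance the internal state and return a number in [0, 1)."""
        self.state = (A * self.state + C) % M
        return self.state / M


def sequence(seed: int, n: int) -> list[float]:
    """The first n outputs of a generator started from the given seed."""
    gen = LCG(seed)
    return [gen.next_float() for _ in range(n)]


if __name__ == "__main__":
    # Same seed, same "random" sequence, every time.
    print(sequence(2024, 3) == sequence(2024, 3))  # True
    # Seeding from the clock makes the output hard to predict in advance.
    print(sequence(time.time_ns(), 3))
```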

I encountered my first computer in 1981: a DEC VAX-11/780 the size of three refrigerators standing side by side. It also required an air conditioner slightly larger than the computer itself, and it had cake-platter disk drives, each the size of a washing machine. The analog-to-digital/digital-to-analog converter occupied fully half of a 6-foot-tall, 19-inch rack. It had less computing power than an average smartphone today. I was enchanted, and I remember that my overwhelming desire was simply to teach it to sing.

But how to do so? Computers excel at producing mechanically perfect bleeps and blops, but who's going to dance (or cry) to that? So you start thinking about undermining that rigid mechanical perfection in an effort to "humanize" or "naturalize" the computer's output. One of the easiest and most obvious ways (on a computer, at least) to make the perfect imperfect is to inject randomness. That does shake things up a bit and, applied judiciously, can enrich whatever is being synthesized. But are we to suppose that randomness is a good model of, or substitute for, the human touch, for a natural feel?
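Injecting randomness to "humanize" a sequence might look like the following minimal sketch. The note representation (an onset time in seconds paired with a MIDI velocity from 1 to 127), the function name `humanize`, and the jitter sizes are assumptions chosen for illustration.

```python
# Hypothetical sketch: "humanizing" a mechanical sequence by adding bounded,
# independent random jitter to each note's timing and loudness.
import random


def humanize(notes, max_shift=0.015, max_vel_change=10, seed=None):
    """Return (onset, velocity) pairs with small random deviations.

    Onsets move by at most +/- max_shift seconds (never below 0.0);
    velocities move by at most +/- max_vel_change and are clamped to 1..127.
    """
    rng = random.Random(seed)
    out = []
    for onset, velocity in notes:
        new_onset = max(0.0, onset + rng.uniform(-max_shift, max_shift))
        new_vel = velocity + rng.randint(-max_vel_change, max_vel_change)
        out.append((new_onset, min(127, max(1, new_vel))))
    return out


if __name__ == "__main__":
    # Eight perfectly even eighth notes at 120 BPM, all at the same velocity.
    grid = [(i * 0.25, 90) for i in range(8)]
    for onset, vel in humanize(grid, seed=3):
        print(f"onset {onset:.3f} s, velocity {vel}")
```

Each deviation here is drawn independently of the one before it, so the result wobbles without any shape of its own; that independence is exactly what makes pure jitter a questionable stand-in for a performer's phrasing.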

No, probably not. Humans don't really behave (or perform music, or create art) randomly. Randomness is not what distinguishes humans from machines. Randomness certainly does exist in nature, but to my way of looking at things, even casual observation indicates that randomness is not the fundamental organizing principle of the universe. Instead, everywhere we look, what we see are patterns and hierarchies. There's something more complex going on. All Douglas-firs resemble one another, but no two are alike.

I was looking for a generating agent that matched, or approached, the kinds of structures and behaviors we observe in nature: an agent capable of a kind of randomness, but with a bit more nuance and inner cohesion. In the 1960s, the meteorologist Edward Lorenz discovered that precise long-term weather prediction is impossible, because we can never have perfect knowledge of the current state of the atmosphere everywhere on the globe. Thus, any model of atmospheric behavior that extrapolates from current conditions will always diverge from the behavior of the real atmosphere over time. This principle is known as sensitive dependence on initial conditions: even minute differences in the starting point can (and will) lead to large and unpredictable differences in long-term behavior. Over the next few decades, the insight that deterministic nonlinear systems are capable of unpredictable, or chaotic, behavior began to permeate research across many disciplines: mathematics, physics, biology, chemistry, medicine, economics, and sociology. Researchers became aware of the ubiquity of nonlinear dynamical systems (many of them capable of chaos) and fractal structures throughout nature, including human behavior, society, and physiology.

I had the good fortune to meet Henry Abarbanel, at that time the director of the Institute for Nonlinear Science at UCSD, who introduced me to the various families of chaotic systems. Benoit Mandelbrot's highly influential book The Fractal Geometry of Nature had also just come out. Eventually it came to be understood that chaos and fractals are two sides of the same coin: fractals are the footprints left behind by chaotic processes. Mountains and coastlines, quintessential examples of fractals in nature, result from the combined nonlinear dynamics of plate tectonics and the weather. One of the most useful textbooks I had on the subject was J.M.T. Thompson and H.B. Stewart's Nonlinear Dynamics and Chaos: Geometrical Methods for Engineers and Scientists, published in 1986. James Gleick made chaos theory accessible to non-engineers and non-scientists with the publication of Chaos: Making a New Science in 1987.
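Sensitive dependence on initial conditions is easy to demonstrate with the logistic map, x_{n+1} = r * x_n * (1 - x_n), a standard textbook example of a chaotic system. It serves here only as a stand-in; it is not Lorenz's atmospheric model. At r = 4 the map is entirely deterministic, yet two starting points that differ by 10^-10 end up on unrelated paths within a few dozen steps, because the gap between them roughly doubles with each iteration.

```python
# Sensitive dependence on initial conditions, shown with the logistic map
# x_{n+1} = r * x_n * (1 - x_n). No randomness is involved anywhere.


def logistic_orbit(x0: float, r: float = 4.0, steps: int = 60) -> list[float]:
    """Iterate the logistic map from x0 and return every state visited."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


if __name__ == "__main__":
    a = logistic_orbit(0.2)
    b = logistic_orbit(0.2 + 1e-10)  # a "minute difference" in the start
    for n in range(0, 61, 10):
        print(f"step {n:2d}: {a[n]:.6f} vs {b[n]:.6f}  gap {abs(a[n] - b[n]):.1e}")
```

Rerunning either orbit from the same starting value reproduces it exactly; it is only the tiny change in the starting value that makes the long-term behavior unpredictable.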

In retrospect, the use of chaos, fractals, stochastic procedures, and information theory (Markov chains, data manipulation) in music and art can be seen as simply the latest manifestation of what has been a hallmark of art practice since the early twentieth century: the use of chance operations and numeric procedures as generators of the new, the unexpected, and the provocative, for both the artist and the public. We see this beginning with the Dadaists, Duchamp's readymades, the frequent use of found objects, the serial techniques of the Second Viennese School and their modern-day disciples, the "action paintings" of Pollock and de Kooning, the "indeterminate music" of Cage and Feldman, and on and on. Artists consciously employ chance operations as a foil to their ingrained tendencies, to make something they would otherwise never have thought of and to force themselves out of their comfort zones.

Most of the works made available on this website employ some form of chaos theory, constrained randomness, game theory, numerical (or numerological, the difference being the added element of superstition) procedures, text or data strings from outside sources, Markov chains, et cetera, both on a macro scale (the architecture of the work as a whole) and on a micro scale (surface texture, details of individual notes); in either case, these are filtered through my own taste and sensibilities. This is not to say that there are no other influences at play: these techniques make up only one set of tools in my box. I also have a deep interest in language and phonetics; harmonic theory reaching back to the days of Pythagoras; contemporary tonal theory as espoused by the Berklee College of Music in Boston [and a more recent discovery (ten years after its publication): Dmitri Tymoczko's A Geometry of Music: Harmony and Counterpoint in the Extended Common Practice]; the interaction of "vernacular" and "cultivated" forms of music throughout history; hip-hop; dance; physics; biology; math; ecosystems (and their collapse); economic systems; political systems; and nonlinear dynamical systems in general. All of these things play a role in my work. Please enjoy.